Have you ever wondered how large systems with sensors everywhere make sense of all the information they gather? It's an interesting question, especially when you consider how much data arrives from far-off devices. Getting a handle on that information, for example everything recorded since yesterday, can tell you a lot about how things are going.

A "remote IoT batch job" is a way to handle large amounts of data from devices that aren't physically near you. These devices, such as a sensor in a distant field or a machine in a far-off factory, collect many small readings. A batch job takes the readings from a set period and processes them all at once, which is usually more efficient than handling each one as it arrives.

Looking at data "since yesterday" is a practical approach. Not everything needs real-time, instant updates; sometimes you just need to check what happened over a specific time frame. That helps people see patterns, spot problems that have built up, or get an overview of how things performed while nobody was watching every second.

Table of Contents

- What Are Remote IoT Batch Jobs, Anyway?

- Why Focus on "Since Yesterday"? The Time Factor

- Who Cares About This? Our Audience

- A Look at the Data Flow

- An Example Scenario: Checking Yesterday's Data

- What Makes These Jobs Good?

- Some Things to Think About

- Tips for Doing It Right

- Frequently Asked Questions

- Looking Ahead with Your IoT Data

What Are Remote IoT Batch Jobs, Anyway?

A "batch job" is a program that runs without someone directing it at every step. It's like giving your computer a list of chores to work through in one go, usually overnight or at another scheduled time. Adding "remote IoT" means those chores involve data from devices that aren't in the same room, or even the same city.

These devices, the "things" in the Internet of Things, could be anything from a temperature sensor in a warehouse to a water meter in a distant village. They collect readings, and rather than each reading being processed the moment it is taken, the readings accumulate, either on the device or in central storage. A batch job then picks up everything that has accumulated and processes it together, which helps manage resources.

The main idea is to process a lot of data at once rather than piece by piece, which can reduce computing and network overhead. It's a bit like collecting a week's laundry and doing it in one day instead of washing one sock at a time. This approach suits tasks that don't need instant answers but do need a good overview of past events.

Why Focus on "Since Yesterday"? The Time Factor

The phrase "since yesterday" isn't a random choice; it points to a very common need. Many businesses and systems need to look at what happened over a specific, recent period, not at what is happening right this second. Think of a daily report, or a comparison of one day against the next.

Think about it: a farm might want the soil moisture levels from all its remote sensors over the last 24 hours to plan today's irrigation. Or a city might check its smart streetlights' energy use from the previous day to spot any odd spikes. This kind of look back, just a day old, provides valuable insight without the demands of real-time processing.

This time frame suits daily reporting, spotting trends that developed overnight, and confirming that everything ran as it should. It's a practical way to manage data without being overwhelmed by a constant stream, a bit like checking the previous day's diary entry.

Who Cares About This? Our Audience

So who finds this helpful? A fairly wide group. If you work with data from far-off machines, say as a system administrator watching over a network of sensors, this is for you. You might be checking that every device is reporting correctly, or looking for glitches that cropped up overnight.

Developers who build the systems that collect and process this data are another big part of the audience. They need to work out how to get the data in, store it, and run batch jobs over it without a hitch.

Then there are the people who make decisions based on this data, such as business owners and operations managers. They don't need the technical details, but they rely on those daily reports to understand how their remote assets are performing, whether things are running efficiently, and whether a problem is brewing that needs their attention.

A Look at the Data Flow

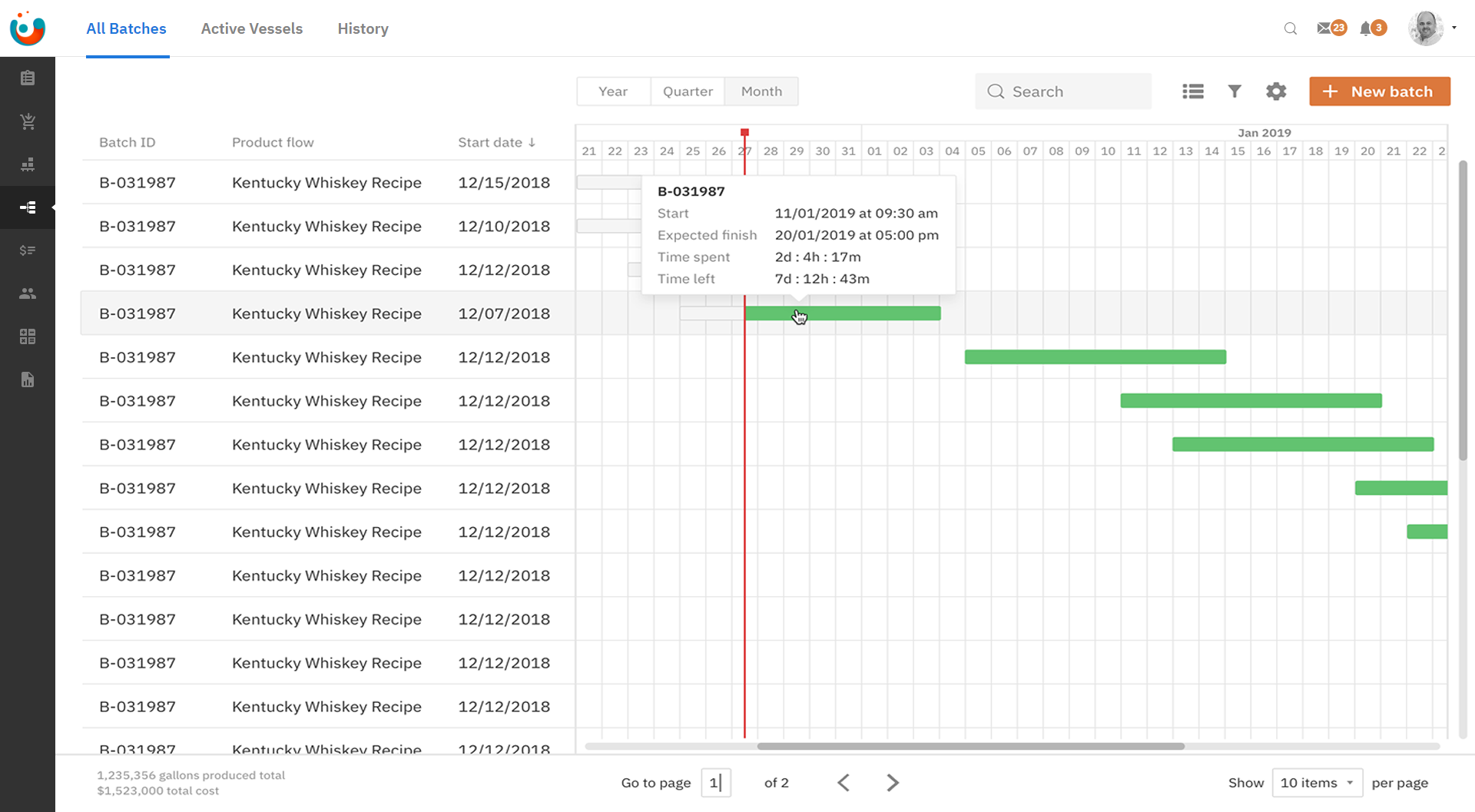

To understand how these batch jobs work, it helps to picture the journey of the data. First come the remote IoT devices, out in the field gathering information. They could be anything from weather stations in remote areas to smart meters in people's homes, and they continuously collect readings such as temperature, energy usage, or movement detection.

Next, this data needs to get somewhere, usually a central place such as a cloud server or a local data hub. It doesn't always travel instantly; sometimes the devices hold readings and send them in chunks to save power or network bandwidth. The central store then holds the data until the batch job picks it up.
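
To make the "send it in chunks" idea concrete, here is a minimal sketch of a device-side buffer. The class name `BufferedSender`, the `chunk_size` setting, and the `uploaded` list (which stands in for the central store) are all assumptions made for illustration, not part of any particular IoT platform.

```python
from dataclasses import dataclass, field


@dataclass
class BufferedSender:
    """Hypothetical device-side buffer that uploads readings in chunks."""
    chunk_size: int = 4
    pending: list = field(default_factory=list)
    uploaded: list = field(default_factory=list)  # stand-in for the central store

    def record(self, reading: dict) -> None:
        # Hold the reading locally; only upload once a full chunk has built up.
        self.pending.append(reading)
        if len(self.pending) >= self.chunk_size:
            self.flush()

    def flush(self) -> None:
        # One "network call" per chunk instead of one per reading.
        if self.pending:
            self.uploaded.append(list(self.pending))
            self.pending.clear()


sender = BufferedSender(chunk_size=4)
for i in range(10):
    sender.record({"seq": i, "temp_c": 20 + i})
sender.flush()  # send whatever is left at the end
```

Ten readings end up as three uploads here rather than ten, which is the power and bandwidth saving the paragraph above describes.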

Once the data is in place, the batch job kicks in. It pulls the data for a specific time period, such as "since yesterday," and works on it. That could mean cleaning it up, converting it into a more useful format, or running calculations to find patterns or totals. It's like sorting through a big pile of papers to find the important ones.
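
As a rough sketch of those three steps (clean, reformat, calculate), the snippet below works on a handful of made-up smart meter readings. The field names `device`, `ts`, and `kwh` and the sample values are assumptions for illustration only.

```python
from collections import defaultdict
from datetime import datetime

# Made-up raw readings as they might arrive: everything is a string, one is bad.
raw = [
    {"device": "meter-1", "ts": "2024-05-01T08:00:00", "kwh": "1.5"},
    {"device": "meter-1", "ts": "2024-05-01T20:00:00", "kwh": "2.0"},
    {"device": "meter-2", "ts": "2024-05-01T09:30:00", "kwh": None},
    {"device": "meter-2", "ts": "2024-05-01T21:30:00", "kwh": "0.5"},
]


def clean(rows):
    """Drop rows with no value and convert strings to proper types."""
    for row in rows:
        if row["kwh"] is None:
            continue
        yield {
            "device": row["device"],
            "ts": datetime.fromisoformat(row["ts"]),
            "kwh": float(row["kwh"]),
        }


def total_per_device(rows):
    """Add up energy use for each device."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["device"]] += row["kwh"]
    return dict(totals)


totals = total_per_device(clean(raw))
```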

An Example Scenario: Checking Yesterday's Data

Imagine a company that manages a large number of smart waste bins spread across a city. Each bin has sensors that report how full it is and whether it has been emptied. To plan its daily collection routes, the company needs to know which bins filled up yesterday and which ones were emptied.

The Setup: Getting Ready

Each smart bin sends its fullness level and emptying status to a central cloud platform every few hours, where the data is stored in a database. The company doesn't need the fullness level every minute, but it does need a summary of the entire previous day to plan today's truck routes.

A batch job is scheduled to run every morning at 3:00 AM. It looks specifically at the data recorded yesterday: everything from 12:00 AM yesterday up to, but not including, 12:00 AM today. (Ending the window at 11:59 PM would miss readings from the final minute of the day.) It's a bit like checking your mailbox at a set time each day, just for yesterday's letters.
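
One way to pin down "yesterday" in code is a half-open window that starts at midnight yesterday and ends at midnight today, so a reading from the last minute of the day still counts. This is only a sketch; the function name `yesterday_window` is made up for this example.

```python
from datetime import datetime, time, timedelta


def yesterday_window(now: datetime) -> tuple[datetime, datetime]:
    """Return (start, end) for yesterday, where start is included and end is not."""
    today_midnight = datetime.combine(now.date(), time.min, tzinfo=now.tzinfo)
    return today_midnight - timedelta(days=1), today_midnight


# The job wakes up at 3:00 AM on 2 May and asks for yesterday's window.
start, end = yesterday_window(datetime(2024, 5, 2, 3, 0))
late_reading = datetime(2024, 5, 1, 23, 59, 30)
in_window = start <= late_reading < end
```

If your timestamps are stored in UTC while your reports follow local time, you would need to decide which of the two "yesterday" means before using something like this.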

The Job: Running It

When 3:00 AM rolls around, the batch job starts. It connects to the cloud database where the bin data is stored and asks for every record whose timestamp falls within yesterday's full 24-hour period. That could be thousands or even millions of data points.
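
The query itself might look something like the sketch below. It uses Python's built-in `sqlite3` with an in-memory database as a stand-in for the cloud database; the table name `bin_readings`, its columns, and the sample rows are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the real cloud database
conn.execute(
    "CREATE TABLE bin_readings (bin_id TEXT, ts TEXT, fullness INTEGER, emptied INTEGER)"
)
conn.executemany(
    "INSERT INTO bin_readings VALUES (?, ?, ?, ?)",
    [
        ("bin-1", "2024-04-30T22:00:00", 40, 0),  # two days ago: excluded
        ("bin-1", "2024-05-01T06:00:00", 70, 0),
        ("bin-1", "2024-05-01T23:59:30", 95, 0),  # last minute of yesterday: included
        ("bin-2", "2024-05-01T12:00:00", 10, 1),
        ("bin-2", "2024-05-02T01:00:00", 15, 0),  # today: excluded
    ],
)


def fetch_window(conn, start_iso, end_iso):
    """Fetch readings with start <= ts < end. ISO-8601 strings sort chronologically."""
    cur = conn.execute(
        "SELECT bin_id, ts, fullness, emptied FROM bin_readings "
        "WHERE ts >= ? AND ts < ? ORDER BY bin_id, ts",
        (start_iso, end_iso),
    )
    return cur.fetchall()


rows = fetch_window(conn, "2024-05-01T00:00:00", "2024-05-02T00:00:00")
```

With millions of rows you would probably want an index on the timestamp column and to read the results in pages rather than all at once, but the shape of the query stays the same.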

With the data in hand, the batch job starts processing. It might filter out duplicate readings, calculate each bin's average fullness over the day, or identify bins that reached a "full" threshold at any point yesterday. It also checks which bins reported being emptied, since those are ready for new waste, which matters for route planning.
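
Here is a small sketch of that processing step. The 90 percent "full" threshold, the tuple layout `(bin_id, timestamp, fullness, emptied)`, and the sample readings are assumptions; a real system would pick its own threshold and format.

```python
from collections import defaultdict

FULL_THRESHOLD = 90  # percent; an assumed cutoff for "full"

# (bin_id, timestamp, fullness percent, emptied flag)
readings = [
    ("bin-1", "2024-05-01T06:00:00", 70, False),
    ("bin-1", "2024-05-01T06:00:00", 70, False),  # exact duplicate
    ("bin-1", "2024-05-01T18:00:00", 95, False),
    ("bin-2", "2024-05-01T09:00:00", 92, False),
    ("bin-2", "2024-05-01T15:00:00", 5, True),
]


def summarize(readings):
    """Deduplicate, then compute a per-bin summary for the day."""
    unique = sorted(set(readings))  # drops exact duplicates, orders by bin then time
    by_bin = defaultdict(list)
    for bin_id, ts, fullness, emptied in unique:
        by_bin[bin_id].append((ts, fullness, emptied))

    summary = {}
    for bin_id, rows in by_bin.items():
        levels = [fullness for _, fullness, _ in rows]
        summary[bin_id] = {
            "avg_fullness": sum(levels) / len(levels),
            "reached_full": any(level >= FULL_THRESHOLD for level in levels),
            "was_emptied": any(emptied for _, _, emptied in rows),
            "last_fullness": rows[-1][1],
        }
    return summary


summary = summarize(readings)
```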

The Outcome: What We Find

When the batch job finishes, it produces a report. The report lists every bin that filled up yesterday and hasn't been emptied since, along with its last reported fullness level. It also highlights bins with unusual readings, such as a bin that went from empty to full in just an hour, which could indicate a problem.

The report then goes to the operations team, who use it to plan efficient collection routes for the day, sending trucks only to bins that actually need emptying. That saves fuel, time, and money, and it keeps the city cleaner, all thanks to a simple batch job looking at yesterday's data.
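
The report logic could be sketched roughly like this. The thresholds `FULL` and `EMPTY`, the helper names, and the sample data are assumptions for illustration; "unusual" here means only the one case mentioned above, a jump from empty to full between two readings taken within an hour.

```python
from datetime import datetime, timedelta

FULL = 90   # percent; assumed "full" threshold
EMPTY = 10  # percent; assumed "empty" threshold


def needs_pickup(rows):
    """True if the bin reached FULL and no later reading says it was emptied."""
    full_seen = False
    for _, fullness, emptied in rows:  # rows are in time order
        if emptied:
            full_seen = False
        elif fullness >= FULL:
            full_seen = True
    return full_seen


def rapid_fill(rows, within=timedelta(hours=1)):
    """True if two consecutive readings jump from EMPTY to FULL within `within`."""
    for (t1, f1, _), (t2, f2, _) in zip(rows, rows[1:]):
        if f1 <= EMPTY and f2 >= FULL and t2 - t1 <= within:
            return True
    return False


def build_report(by_bin):
    return {
        bin_id: {
            "needs_pickup": needs_pickup(rows),
            "last_fullness": rows[-1][1],
            "unusual": rapid_fill(rows),
        }
        for bin_id, rows in sorted(by_bin.items())
    }


by_bin = {
    "bin-1": [(datetime(2024, 5, 1, 6), 70, False), (datetime(2024, 5, 1, 18), 95, False)],
    "bin-2": [(datetime(2024, 5, 1, 9), 92, False), (datetime(2024, 5, 1, 15), 5, True)],
    "bin-3": [(datetime(2024, 5, 1, 10), 5, False), (datetime(2024, 5, 1, 10, 45), 96, False)],
}
report = build_report(by_bin)
pickup_list = [bin_id for bin_id, entry in report.items() if entry["needs_pickup"]]
```

In this made-up data, bin-2 was full at 9:00 but emptied by 15:00, so it stays off the pickup list, while bin-3 is both due for pickup and flagged for a closer look.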

What Makes These Jobs Good?

There are several good reasons to use remote IoT batch jobs, especially for a specific past period like "since yesterday." For one, they handle large volumes of data well. Instead of processing each tiny piece of data as it arrives, which can tax a system, they wait until a full set has accumulated and process it in one pass.

Another plus is that they don't demand constant attention. You schedule them to run at a certain time and they work in the background, which frees up resources and people for other tasks instead of watching incoming data streams. It's like having a reliable helper who takes care of a recurring task without being asked each time.

They can also save money. Processing data in batches can use less computing power and network bandwidth than an always-on, real-time system, so you may get by with less expensive hardware or a smaller cloud bill. For many uses, especially daily look-backs, batch jobs are a smart choice.

Some Things to Think About

Handy as remote IoT batch jobs are, there are a few things to keep in mind. First, they don't give you instant information. If you need to know what's happening right this second, a batch job over yesterday's data won't cut it. There is always a delay, because the job has to wait for the period to end and then collect and process the data.

Second, errors surface late. If a remote device stops sending data, or sends bad data, the batch job won't notice until it runs, so you might not learn about a problem until the next morning's report. You need other ways to monitor device health.
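
One simple health check, which could run more often than the daily batch job, is to list devices that have gone quiet. The sketch below assumes you keep a record of when each device was last heard from; the function name `silent_devices` and the six-hour limit are made up for this example.

```python
from datetime import datetime, timedelta


def silent_devices(expected_ids, last_seen, now, max_silence=timedelta(hours=6)):
    """Return devices that never reported or have been quiet longer than max_silence."""
    silent = []
    for device_id in sorted(expected_ids):
        seen = last_seen.get(device_id)
        if seen is None or now - seen > max_silence:
            silent.append(device_id)
    return silent


now = datetime(2024, 5, 2, 3, 0)
last_seen = {
    "sensor-a": datetime(2024, 5, 2, 2, 0),   # reported an hour ago
    "sensor-b": datetime(2024, 5, 1, 12, 0),  # quiet for 15 hours
}
quiet = silent_devices({"sensor-a", "sensor-b", "sensor-c"}, last_seen, now)
```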

Third, setup takes effort. You need to make sure the data is collected properly, stored in a form the batch job can read, and that the job itself does exactly what you need. It's rarely plug-and-play, and it takes some careful planning to get right.

Tips for Doing It Right

If you're thinking about using remote IoT batch jobs, especially for "since yesterday" look-backs, here are some tips. First, make sure your data collection is solid. The quality of a batch job's output depends entirely on its input, so check that your remote devices are consistently sending good, clean data.
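
What counts as "good, clean data" depends on your devices, but a basic check might look like the sketch below. The required fields and the 0 to 100 range for `fullness` are assumptions borrowed from the waste bin example.

```python
def validate(reading):
    """Return a list of problems; an empty list means the reading looks usable."""
    problems = []
    for key in ("device_id", "ts", "fullness"):
        if reading.get(key) is None:
            problems.append(f"missing {key}")
    fullness = reading.get("fullness")
    if fullness is not None:
        is_number = isinstance(fullness, (int, float))
        if not is_number or not 0 <= fullness <= 100:
            problems.append("fullness is not a number between 0 and 100")
    return problems


good = validate({"device_id": "bin-1", "ts": "2024-05-01T06:00:00", "fullness": 70})
bad = validate({"device_id": "bin-1", "ts": None, "fullness": 140})
```

Counting how many readings each device fails per day is one way to spot a sensor that is starting to misbehave.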

Next, think about when you schedule your jobs. Running them during off-peak hours, such as late at night, avoids slowing down other systems. Scheduling the run after midnight also fits the "since yesterday" view, because the whole previous day's data is available by then.
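
In practice a scheduler usually handles the timing for you; on Unix-like systems, for instance, a cron entry beginning `0 3 * * *` runs a command at 3:00 AM every day. If you want to see the logic spelled out, here is a small sketch that works out the next run time. The function name `next_run` and the 3:00 AM default are assumptions taken from the example scenario.

```python
from datetime import datetime, time, timedelta


def next_run(now: datetime, run_at: time = time(3, 0)) -> datetime:
    """Return the next occurrence of run_at strictly after now."""
    candidate = datetime.combine(now.date(), run_at, tzinfo=now.tzinfo)
    if candidate <= now:
        candidate += timedelta(days=1)  # today's slot has passed; use tomorrow's
    return candidate


before = next_run(datetime(2024, 5, 2, 1, 0))   # 1:00 AM, so today's 3:00 AM is next
after = next_run(datetime(2024, 5, 2, 14, 0))   # 2:00 PM, so tomorrow's 3:00 AM is next
```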

Also keep an eye on the batch jobs themselves. Set up alerts so you know when a job fails to run or takes much longer than it should. That helps you catch problems early, even though the data itself is a day old.
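
A minimal way to get those alerts is to wrap the job in a small runner, as in the sketch below. The function `run_with_alerts` and the `alert` callback are hypothetical; in a real setup the callback might send an email or post to a chat channel.

```python
import time


def run_with_alerts(job, max_seconds, alert):
    """Run job(); call alert(message) if it raises or exceeds max_seconds."""
    started = time.monotonic()
    try:
        result = job()
    except Exception as exc:
        alert(f"job failed: {exc}")
        return None
    elapsed = time.monotonic() - started
    if elapsed > max_seconds:
        alert(f"job ran long: {elapsed:.2f}s (limit {max_seconds}s)")
    return result


def failing_job():
    raise RuntimeError("database unreachable")


def slow_job():
    time.sleep(0.05)
    return "done"


alerts = []
ok_result = run_with_alerts(lambda: 42, max_seconds=60, alert=alerts.append)
failed_result = run_with_alerts(failing_job, max_seconds=60, alert=alerts.append)
slow_result = run_with_alerts(slow_job, max_seconds=0.01, alert=alerts.append)
```

Note that a wrapper like this can only report on jobs that actually start. To catch a job that never ran at all, you would need a separate check, for example one that looks for today's report and raises an alert if it is missing.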

Frequently Asked Questions

What is a batch job in IoT?

A batch job in IoT is a program that processes a large amount of data collected from remote devices all at once, usually at a scheduled time. It gathers the data for a specific period, like "since yesterday," and works on it together. This helps manage resources and draw insights from many data points without needing constant, real-time updates.

Why would you process IoT data from "yesterday"?

Processing IoT data from "yesterday" is very common for daily reporting, spotting trends, and checking performance over a full day. It lets businesses understand what happened during a completed period without the demands of live processing. That supports efficient planning, detection of problems that developed overnight, and general system oversight.

How do remote IoT devices send data for batch processing?

Remote IoT devices usually send their data to a central storage location, often a cloud platform or a local server. They might send it continuously, or in chunks at set intervals to save power or bandwidth. The batch job then connects to this storage, pulls the data for the specified time frame, like "since yesterday," and begins its work.

Looking Ahead with Your IoT Data

Thinking about how you handle data from your remote IoT devices, especially when you're looking back at what happened "since yesterday," is an important step. It helps you make sense of all those readings and turn them into something useful. With batch jobs, you can get a clear picture of your operations without getting bogged down in every single data point.

Consider how a regular check of yesterday's data could help your own systems run more smoothly. Maybe it's about spotting a pattern in energy use, or confirming that all your far-off sensors are still working as they should. Taking this step can lead to better decisions and more efficient ways of working. It's really about making your data work for you.

Detail Author:

- Name : Marley Champlin